Artificial Intelligence

(From my science essay collection How Do We Know?)

by Kenny A. Chaffin

All Rights Reserved © 2013 Kenny A. Chaffin

The first Alien Intelligence we meet may not be from another planet, but from our own computer labs. Many of us walk around with a computer in our pocket capable of listening to, parsing, and responding (sometimes even correctly) to our voices. We Google for information by typing in phrases, sentences or disconnected words, and the artificial intelligence in Google’s search engine almost always comes back with what we are looking for. These dedicated applications are on the verge of intelligent behavior and could certainly be called intelligent within their domains. Other systems go further, in some cases demonstrating more general intelligence, such as IBM’s Watson system, which recently defeated the all-time Jeopardy! champions. So how soon until we get to meet these Alien Intelligences of our own creation? We could perhaps see them within the century, and almost certainly (provided we don’t kill ourselves off or get whacked by an asteroid) by next century. A Watson-like system is being rolled out by IBM to assist in medical diagnosis. Google, Google Voice, and Siri will continue to improve. New research into machine learning, user interfaces and the human brain is being brought from the lab into practice. It’s been a long and bumpy road, at least by the measure of technological progress, since the first Dartmouth conference on artificial intelligence in 1956. In 1967 Marvin Minsky boldly predicted that "within a generation ... the problem of creating 'artificial intelligence' will substantially be solved." Other bold predictions followed every few years, then every decade, until, with little actual success, the predictions stopped. In some ways that was when the work really began. And that work, as often happens, emerged not from the expected avenues but from the back alleys and offshoots of other research.

Artificial intelligence is defined as a branch of computer science dealing with the simulation of intelligent behavior in computers. John McCarthy, one of the Dartmouth conference organizers and the man who coined the term, defined it as "the science and engineering of making intelligent machines." The founding idea was that the central feature of humanity, intelligence, could be analyzed, described and simulated by a machine. The core issues in accomplishing this have to do with perception, communication, analysis of sensory input, reasoning, learning, planning and responding to real-world events. The ability to perform these functions in a general manner (like a human) is known as Strong AI, though much of the work and research is done on subsets of that larger goal. There are a number of associated areas as well, such as neuron simulation, learning theory and knowledge representation.

The key, of course, is understanding intelligence: what it is, what it does, and perhaps even why it does what it does. But it is a bit like art or pornography: “I may not be able to define it for you, but I can certainly tell you what it is when I see it.” There are many, often disparate, definitions of intelligence, but what seems to be at the core is problem solving. It involves identifying a problem or obstacle, seeking or creating a solution, applying that solution and then evaluating the result. The evaluation provides feedback that informs future decisions.

The artificial intelligence field got its start at the Dartmouth conference with four key figures: Allen Newell, John McCarthy, Marvin Minsky, and Herbert Simon. All of them were computer scientists with the exception of Simon, who was more of a psychologist and economist as well as being knowledgeable in other disciplines, and in fact all of them were somewhat cross-disciplinary. This idea of building a machine capable of human intelligence came not long after the advent of the first practical computers. Given the broad capabilities of computer programming made possible by the von Neumann architecture, itself grounded in Alan Turing’s mathematical concepts, it seemed quite possible to program a computer to emulate human intelligence and decision making. But oh what a tangled web was to be woven from this.

Throughout history there have been numerous attempts, desires, stories and examples of building or bringing inanimate objects to life. There are clockwork robotic devices; statues, puppets and trees that came to life (Pygmalion, Pinocchio); mechanical devices built to simulate, emulate or recreate human behavior, sometimes even with dwarves or children hidden inside to fool the crowd; and, reaching back even further, the oracle at Delphi. Given all the literature, myths, stories and actual devices, there must be something very deep in the human psyche that longs to re-create itself. Perhaps it even comes down to the genetic drive to reproduce, recreate and perpetuate ourselves; perhaps a kind of genetic imperative drives our attempts, our need, to explore artificial intelligence.

Nevertheless, the work got seriously underway following the Dartmouth conference, and there was serious money behind it, primarily from the defense departments of the United States and Britain as well as the Soviet Union and other world powers of the mid-20th century.

The perceived promise led DARPA (then called ARPA) to invest approximately $3 million a year from 1963 to the mid-1970s. Similar investments took place in Britain. DARPA, of course, was looking for potential military applications during this Cold War period of worldwide tension. The promise and the culture of the time, though, led to a devastating situation. The funds flowed with little oversight, and the field went in many directions that produced little applicable output. These were the early days of computers, and the algorithms and programs designed to emulate things like human logic and reasoning were quite complex and resource-demanding. They did not work well on the hardware available at the time. Either the programs had to be scaled back and limited in scope, or very long time frames had to be allowed in order to get results. Neither approach came close to the abilities of a human brain on any level. Sensing capabilities such as vision and audio, which were being worked on as a subset of the AI problem, required massive programming just to acquire the data and manipulate it into a form the AI components could use.

By the mid-1970s the faltering field was stripped of funding and largely abandoned. This is now known as the first AI Winter, and it would last almost a decade, until the early 1980s. Some work continued, but without the freely flowing funds it was much more focused, more a labor of love than random experimentation. During this time much criticism was also leveled at the computer scientists by other academic departments. Philosophy, psychology, biology and mathematics all took shots, but by the same token they were all interested in a field they had been shut out of in this early phase. As a result many research institutes began bringing together diverse cross-disciplinary groups to work on the research and providing means for them to work better together. So we get learning specialists helping to design computer learning applications. We get knowledge management experts helping to devise search and storage hardware and software. And we find neurological experts working with programmers to simulate neural networks.

This ‘background’ research led to the next step in AI, expert systems, which were all the rage in the 1980s. An expert system was intended to be a subject matter expert in a specific or limited domain. It incorporated a knowledge base and a means of searching and retrieving (as well as updating) information. This led to a boom in database research and development. Computers were racing along following Moore’s Law, with the number of transistors on a chip doubling roughly every two years. This allowed for more complex search algorithms, which ran ever faster, as did database search and retrieval. The programming language of choice for these systems was Lisp, a symbolic manipulation language thought to better model human thought processes. Certainly there was some success for these expert systems, but again the results failed to match the expectations and once more the field floundered.
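
The knowledge-base-plus-inference idea behind expert systems can be sketched in a few lines. This is a minimal, hypothetical illustration in Python rather than the Lisp such systems were usually written in. It uses a set of known facts, a list of if-then rules, and a forward-chaining loop that keeps firing rules until nothing new can be concluded. The toy animal-identification rules and all names in the code are invented for illustration. Real 1980s expert systems had far larger knowledge bases and more elaborate inference and explanation machinery.

```python
# A toy forward-chaining inference engine: a rule "fires" when all of its
# conditions are already in the fact base, adding its conclusion as a new fact.
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    conditions: frozenset[str]
    conclusion: str


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Apply the rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical knowledge base for a toy animal-identification domain.
RULES = [
    Rule(frozenset({"has feathers"}), "is a bird"),
    Rule(frozenset({"is a bird", "cannot fly", "swims"}), "is a penguin"),
    Rule(frozenset({"has fur", "gives milk"}), "is a mammal"),
]

if __name__ == "__main__":
    derived = forward_chain({"has feathers", "cannot fly", "swims"}, RULES)
    print(sorted(derived))
    # → ['cannot fly', 'has feathers', 'is a bird', 'is a penguin', 'swims']
```

Given the starting facts, the first rule concludes "is a bird," which in turn lets the second rule conclude "is a penguin." The mammal rule never fires because its conditions are not met.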

In the meantime Japan initiated the Fifth Generation Computer Project, which was intended to create computers and programs that could communicate using natural language, do visual processing and recognition, and emulate human reasoning. They dropped Lisp and chose a newer language, Prolog, as the core programming language, perhaps to leave the old ways behind and start anew. Other countries responded in kind to this ‘threat’ of computer dominance. During this time much work was beginning to focus on neural networks and emulating the brain, in hopes of breaking free of the step-by-step von Neumann style of programming. This took place (and continues to this day) in both hardware and software, emulating the workings of individual brain neurons as well as connecting them in the manner of a biological brain. But again the lack of substantial results applicable to business or military uses brought on another ice age. The second AI Winter lasted from the late 1980s to the mid-1990s.
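
The idea of emulating an individual neuron can be illustrated with the perceptron, one of the simplest artificial neuron models. This is a toy sketch, not a model of any particular project mentioned here. The neuron computes a weighted sum of its inputs plus a bias and "fires" (outputs 1) if that sum is above zero. Training uses the classic perceptron learning rule, which nudges the weights after each wrong answer. The logical-AND training data, the function names and the parameter values are all assumptions chosen for illustration.

```python
# A single artificial neuron (perceptron) trained with the classic
# perceptron learning rule. Toy example: it learns logical AND.


def predict(weights: list[float], bias: float, inputs: list[float]) -> int:
    """Fire (return 1) if the weighted sum of inputs plus bias exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples: list[tuple[list[float], int]],
          epochs: int = 20, rate: float = 1.0) -> tuple[list[float], float]:
    """Start from zero weights and adjust them after every wrong answer."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias


# Hypothetical training data: the four cases of logical AND.
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

if __name__ == "__main__":
    w, b = train(AND_SAMPLES)
    print([predict(w, b, x) for x, _ in AND_SAMPLES])  # → [0, 0, 0, 1]
```

After training on the four AND cases, the neuron answers all of them correctly. Neural networks connect many such units in layers, which lets them learn patterns that a single neuron cannot.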

By this time the field of robotics was rising, particularly due to the use of robots in assembly factories such as car manufacturing and electronics assembly plants. There was money to be had for robotics research, and a new slant on the AI field emerged. Giving these factory robots the means to handle ambiguity, recognize defective parts, and align and assemble parts properly without supervision or extremely precise programming and logistics provided a new venue for AI. It wasn’t just emulating human intelligence and reasoning, but performing the tasks a human would do in a real-world assembly factory.

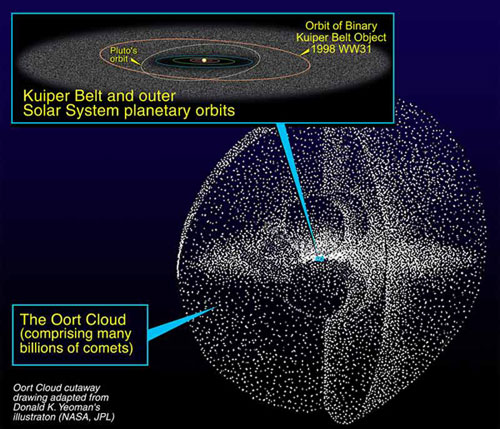

A separate but similar revolution was taking place in space exploration. Our robotic probes to Mars, Venus, Saturn and the outer planets were being designed with increasingly autonomous and error-correcting capabilities. These robots, rovers and probes of various styles, had to operate autonomously in much more demanding and dangerous situations than the factory floor. NASA and the military funded some of the best minds, universities and corporations to build these mechanical emissaries to the cosmos.

There was a completely different revolution taking place during these years as well. Since the mid-to-late 1980s, business demand for data storage and retrieval has exploded like a sun going nova. This fueled much database research and even special-purpose hardware research, such as the Teradata and Britton Lee database machines. The advent of the internet brought search engines to the fore, and the star that emerged, of course, was Google, now a household name and a verb synonymous with internet search. The massive data problem is far from solved; it continues to grow. Everything has gone digital. Businesses store all their corporate data digitally, and our space telescopes produce massive amounts of data, as do research projects such as the Human Genome Project, other DNA and biological analysis research, and the recently announced Human Brain Mapping initiative. This issue is known today as the Big Data problem, and significant amounts of cash from government and private industry are pouring into managing it. The results apply to AI research as well, because one of its obstacles is providing and managing the enormous amount of information storage required to emulate a human brain.

A human brain has roughly as many neurons as there are stars in the Milky Way galaxy: about 100 billion. And each of these neurons may be connected to thousands of other neurons. This creates an amazingly complex multi-processing system that is not only difficult to emulate but also requires computing capabilities beyond those of present-day systems. We may see it within the next half-century, though.
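
A rough back-of-the-envelope estimate can make that scale concrete. Take about 10^11 neurons and assume, for illustration only, between 1,000 and 10,000 connections per neuron (the text says only "thousands"). The total number of connections is then roughly:

```latex
N_{\text{conn}} \approx N_{\text{neurons}} \times k
  \approx 10^{11} \times \left(10^{3}\ \text{to}\ 10^{4}\right)
  = 10^{14}\ \text{to}\ 10^{15}
```

Suppose each connection could be stored in a single byte. That is a deliberately optimistic assumption that ignores connection strengths, timing and the neurons themselves. Even so, the total would be about 100 terabytes to 1 petabyte, which is why the Big Data work described above matters for brain emulation.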

All of these areas of research and application are at the forefront of today’s computer, information and cognitive science. Google is increasingly capable of parsing and analyzing natural language inputs and providing relevant results in extremely short time frames. We have cell phones that can process speech input and provide similar search results or actions based on the spoken words. Our robots are exploring Mars, and Voyager 1 (launched 35 years ago) is still functional and approaching the edge of interstellar space. Radio messages take about 17 hours to travel between it and Earth. The autonomous land vehicle trials by DARPA and Google continue, and several states have already passed laws allowing autonomous vehicles such as Google’s to be tested on public roads.

It seems we are now approaching real AI, the capabilities of humans, from several oblique angles, following the failure of direct methods: programmed rational decision making, expert systems, and embedded logic. It seems that real AI is coming not from the research labs but from the factory floor, our autonomous space probes and vehicles, and our information management needs. We continue to attempt to emulate the physical structure and workings of the human brain, but some of our best results are in our pockets: our cell phones, with voice-actuated access to the world’s knowledge at the tip of our tongues.


About the Author